9. Search Engine Tools and Services for Webmasters

While the SEO community keeps creating more and more tools for SEO professionals and for businesses and organizations looking to optimize their own sites, search engines themselves also provide a wide variety of tools and services to help webmasters with their SEO efforts. Google, for example, offers a whole range of tools, analytics and advisory content for webmasters who want to improve their relationship with the search engine giant and optimize their websites in line with Google’s recommendations. After all, who understands search engine optimization better than the search engines themselves?

Search engine protocols

Let us look at a detailed list of search engine protocols:

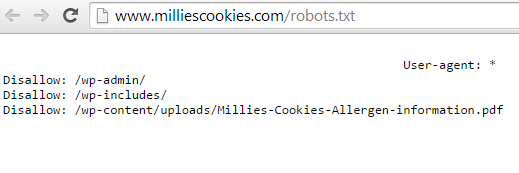

The robots exclusion protocol

This protocol works through the robots.txt file, which is usually found in the root directory of a website (e.g. www.milliescookies.com/robots.txt). The file gives directives to automated web crawlers and spiders on a range of issues: where they can find sitemap data, which areas of a website are off limits and should not be crawled, and how long they should wait between requests (crawl delay).

Here is a list of commands that can be used to instruct the robots:

- Disallow: stops robots from accessing certain pages or folders on a website.

- Crawl-delay: tells robots how long to wait (in seconds) between requests to the server. Not every search engine honors it; Google, for example, ignores this directive.

- Sitemap: shows robots where they can find the sitemap and its related files.
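To see how a compliant crawler reads these directives, here is a minimal sketch using Python's standard urllib.robotparser module (crawl_delay() needs Python 3.6+ and site_maps() needs 3.8+). The robots.txt content is a hypothetical file for the example site, and this parser applies simple first-match rules, so treat it as an approximation of how any particular search engine interprets the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for the example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 10
Sitemap: https://www.milliescookies.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse() accepts the file's lines directly

# "Disallow: /checkout/" blocks everything under that folder for all user agents.
print(parser.can_fetch("ExampleBot", "https://www.milliescookies.com/checkout/cart"))  # False
print(parser.can_fetch("ExampleBot", "https://www.milliescookies.com/recipes/"))       # True
print(parser.crawl_delay("ExampleBot"))  # 10 (seconds)
print(parser.site_maps())                # ['https://www.milliescookies.com/sitemap.xml']
```

Remember that this only shows what a well-behaved bot would do; nothing forces a rogue bot to run such a check.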

Beware: while most robots are well-behaved and compliant, some rogue robots are built with malicious intent, do not follow the protocol, and will ignore the directives in robots.txt files. Such robots can be used to steal private information and access content not meant for them. Because robots.txt is publicly readable, it is best not to list the addresses of administrative sections or private areas of an otherwise public website in it. Instead, the meta robots tag can be used on such pages to instruct search engines not to index them. You can find out more about the meta robots tag later in this chapter.

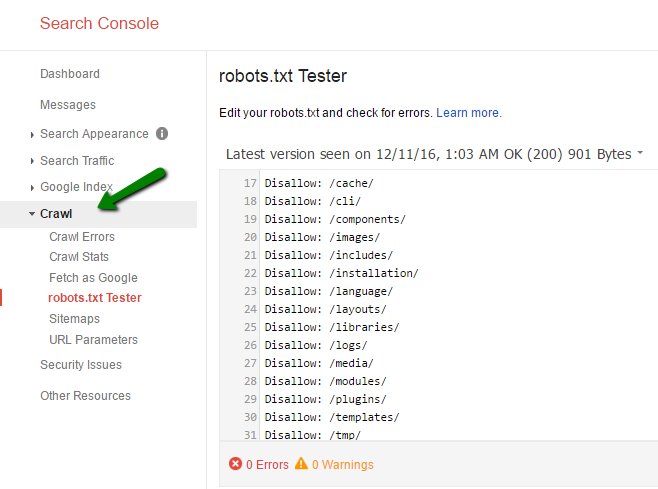

Google Search Console can be used to access and analyze search engine protocols of your website.

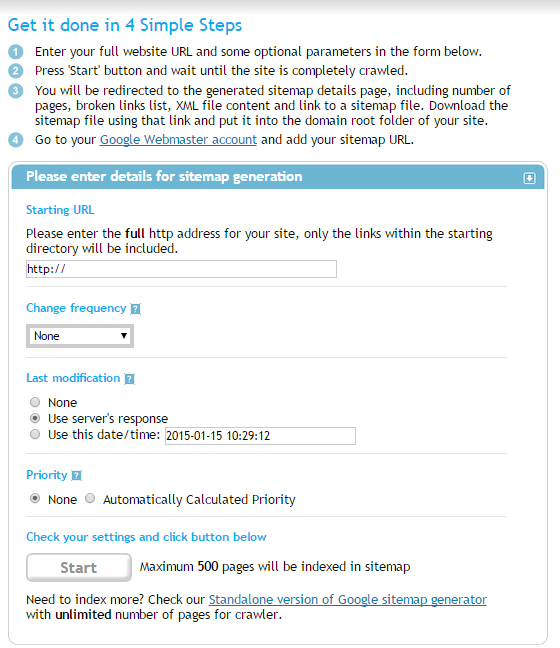

Sitemap

A sitemap can be thought of as a treasure map that guides search engines on the best way to crawl your website. It helps search engines find and classify the content on a website, which, embarrassingly enough, they would have a tough time doing by themselves. Sitemaps come in a variety of formats, and they can point to different types of content, whether audio-visual files, mobile versions of pages or news.

XML-Sitemaps.com is a tool that makes it quick and easy to generate your own sitemap.

There are three formats in which sitemaps usually exist:

- RSS

There is a somewhat amusing debate over whether RSS stands for Really Simple Syndication or Rich Site Summary. RSS is an XML dialect and is quite convenient to maintain, since RSS sitemaps can be coded to update automatically whenever new content is added. The downside is that those very updating properties make RSS sitemaps harder to manage than other formats.

- XML

XML stands for Extensible Markup Language, but at the time of its creation someone decided that XML sounded cooler than EML, so the initials stuck. XML is the format recommended by most search engines and website design gurus, and it is no coincidence that it is also the most commonly used. It is much easier for search engines to parse, it can be created by a large number of sitemap generators, and it offers the most granular control over the parameters of each page.

The downside of that granular control is that XML sitemap files are usually much larger than files in other formats.

- .TXT File

The .txt format is remarkably easy to use: it lists one URL per line, up to 50,000 lines. Sadly, though, it does not allow you to add metadata for pages.
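The XML and .txt formats can be sketched with Python's standard library. The urlset, url, loc, lastmod, changefreq and priority element names and the namespace follow the sitemaps.org protocol; the helper names build_xml_sitemap and build_text_sitemaps and the example URLs are hypothetical. RSS sitemaps are not covered here.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_FILE = 50_000  # per-file URL limit in the sitemaps.org protocol

def build_xml_sitemap(pages):
    """pages: list of dicts with a required 'loc' key and optional
    'lastmod', 'changefreq' and 'priority' keys (the per-page parameters)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        url = ET.SubElement(urlset, "url")
        for tag in ("loc", "lastmod", "changefreq", "priority"):
            if tag in page:
                # ElementTree escapes characters such as & in the text for us.
                ET.SubElement(url, tag).text = str(page[tag])
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

def build_text_sitemaps(urls, limit=MAX_URLS_PER_FILE):
    """Split a URL list into .txt sitemap bodies, one URL per line,
    with at most `limit` URLs in each file."""
    return ["\n".join(urls[i:i + limit]) + "\n" for i in range(0, len(urls), limit)]

xml_sitemap = build_xml_sitemap([
    {"loc": "https://www.milliescookies.com/", "changefreq": "daily", "priority": 1.0},
    {"loc": "https://www.milliescookies.com/recipes/", "lastmod": "2024-01-15"},
])
print(xml_sitemap)
```

The contrast between the two functions mirrors the trade-off above: the XML version can carry per-page parameters, while the text version is just a list of URLs.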

Meta robots

You can use the meta robots tag to provide instructions for search engine robots that apply to one page at a time. This tag is included in the head area of the HTML file.

Here is an example of a meta tag for search engine bots:

    <head>
      <title>Welcome Cheetah Lovers</title>
      <meta name="robots" content="noindex, nofollow">
    </head>

The above tag instructs spiders and other bots not to index this page and also not to follow the links found on this page. You can find out more about the types of meta tags above in the section on meta tags found in Chapter 3.
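To see how a compliant bot might read this tag, here is a minimal sketch using Python's built-in html.parser module. The class and function names are hypothetical, and real crawlers also honor engine-specific variants (such as name="googlebot") that this sketch ignores.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collects the directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser already lowercases tag and attribute names.
        if tag != "meta":
            return
        attributes = {name: value or "" for name, value in attrs}
        if attributes.get("name", "").lower() != "robots":
            return
        # content is a comma-separated list such as "noindex, nofollow".
        for token in attributes.get("content", "").split(","):
            if token.strip():
                self.directives.add(token.strip().lower())

def robots_directives(html):
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives

page = ('<head><title>Welcome Cheetah Lovers</title>'
        '<meta name="robots" content="noindex, nofollow"></head>')
print(sorted(robots_directives(page)))  # ['nofollow', 'noindex']
```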

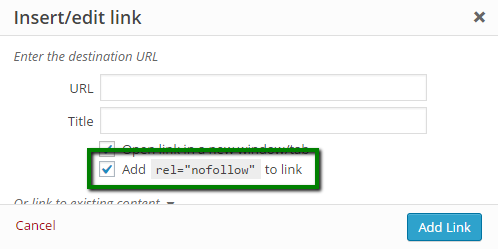

The nofollow attribute

You may remember the discussion of nofollow links in Chapter 3; you can go back to the section dedicated to them for a detailed study of the rel="nofollow" attribute and its uses. In summary, nofollow links allow you to link to a page without passing on any link juice, and thus without passing your vote or approval on to search engines. Although search engines will respect your wish not to give those links value, they might still follow them for their own discovery purposes, to unearth new areas of the internet.
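The attribute lives on the link itself, for example `<a href="..." rel="nofollow">`. As a minimal sketch, assuming you want to audit which links on a page carry it, Python's html.parser can sort links into followed and nofollow groups. The class and function names are hypothetical, and the sketch only reads the markup; it says nothing about how a search engine weighs those links.

```python
from html.parser import HTMLParser

class LinkAuditor(HTMLParser):
    """Sorts <a href> links into followed and nofollow groups."""

    def __init__(self):
        super().__init__()
        self.followed = []
        self.nofollow = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        # rel holds space-separated tokens, e.g. rel="nofollow noopener".
        rel_tokens = (attributes.get("rel") or "").lower().split()
        if "nofollow" in rel_tokens:
            self.nofollow.append(href)
        else:
            self.followed.append(href)

def audit_links(html):
    auditor = LinkAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor.followed, auditor.nofollow

followed, nofollow = audit_links(
    '<p><a href="/recipes/">Recipes</a> '
    '<a href="https://example.com/ad" rel="nofollow noopener">Ad</a></p>'
)
print(followed)  # ['/recipes/']
print(nofollow)  # ['https://example.com/ad']
```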

The canonical tag

It is possible to end up with any number of different URLs that lead to identical pages with identical content. This may not seem like a big deal, but it has unhelpful repercussions for site owners and SEOs looking to improve rankings and page value. The reason, as discussed earlier, is simple: search engines are not yet as smart as we would like them to be, and they might read the following as four pages rather than one, dividing the value of the content by four and lowering its rankings. Think of it as juice being poured into four glasses instead of one big mug.

Is this one page or four different ones?

- http://www.notarealwebsite.com

- http://notarealwebsite.com

- http://www.notarealwebsite.com/default.asp

- http://notarealwebsite.com/default.asp

The canonical tag tells search engines which version to count as the ‘authoritative’ one for the purpose of results. This lets the search engine understand that all of them are versions of the same page, so that only one URL is counted for ranking purposes while the others are consolidated into it.
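The tag itself is a `<link rel="canonical" href="...">` element placed in the head of each duplicate page. As a minimal sketch, assuming hypothetical site rules (prefer the "www" host and treat /default.asp as the homepage), the four variants above can be mapped to one preferred URL and the matching tag generated. The rule constants and function names are illustrative, not a standard.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit

# Hypothetical site-specific rules for this example.
PREFERRED_HOST = "www.notarealwebsite.com"
HOST_ALIASES = {"notarealwebsite.com"}
DEFAULT_DOCUMENTS = {"/default.asp"}

def canonical_url(url):
    """Map any of the duplicate URL variants to one preferred URL."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host in HOST_ALIASES:
        host = PREFERRED_HOST
    path = parts.path or "/"
    if path.lower() in DEFAULT_DOCUMENTS:
        path = "/"
    return urlunsplit((parts.scheme.lower(), host, path, parts.query, ""))

def canonical_link_tag(url):
    """Build the <link rel="canonical"> element for the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}">'

variants = [
    "http://www.notarealwebsite.com",
    "http://notarealwebsite.com",
    "http://www.notarealwebsite.com/default.asp",
    "http://notarealwebsite.com/default.asp",
]
for variant in variants:
    print(canonical_link_tag(variant))
```

Every variant prints the same tag, which is exactly the signal the canonical tag is meant to send: four addresses, one page.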

Search engine myths and misconceptions

Let us be honest: we have all heard our fair share of SEO legends that, even after being debunked, still linger in our minds as doubts. Here are a few of them to help you separate fallacy from fact.

Using keywords like stuffing turkeys

This is one of the oldest and most persistent fallacies of the SEO world and seems to be invincible. You might recall seeing pages that may look something like this:

“Welcome to McSmith’s Amazing Spicy Chicken, where you’ll find the best Amazing Spicy Chicken that you’ve ever had. If you like Amazing Spicy Chicken, then McSmith’s Amazing Spicy Chicken is the only place for you to enjoy Amazing Spicy Chicken. McSmith’s – Amazing Spicy Chicken for the whole family”

Shoddy SEO work like that will not only make you lose your appetite but also make you never want to hear the words Amazing Spicy Chicken again. The myth behind this sad technique is that stuffing a page, its title, anchor text and prominent content with as many keywords as possible is the magic key to top rankings on search engines. Unfortunately, a lot of SEOs still believe it, and some SEO tools still emphasize keyword density and claim that search engine algorithms use it. This is flat out false.

Search engines actually reward keywords used with intelligence, moderation and relevance. Do not waste your time calculating formulae and counting keywords; all you will achieve is annoying visitors and looking like a spammer.

Improving organic results with paid results

This is pure fiction. It has never been possible and it never will be. Even companies spending millions on search engine advertising still have to battle for organic results, and their paid campaigns earn them no extra support, not even the slightest nudge, in organic rankings.

Google, Yahoo! and Bing all keep a strict separation between their advertising and organic search departments to guard against this kind of crossover, which would put the legitimacy of the whole search engine machinery at risk. If an SEO tells you they can help you perform this ‘miracle’, move very slowly towards the nearest exit and then run.

The meta tag myths

Okay, we will admit this one used to be true and did work quite well for a while, but it has not been part of the rankings equation for a considerable time. Long ago, search engines let you use the meta keywords tag to list relevant keywords from your content, so that when a user’s query matched your keywords, your page would automatically come up in the results.

However, the same kind of people who gave rise to myth number one pushed this method to the point of a spamming overdose, and it did not take long for search engines to regret it and drop the tag from their algorithms. So be warned, once and for all: this method no longer works. Anyone who tells you that SEO is really about meta tagging is still living in the previous decade.

Measuring and tracking success

A well-known maxim of management science holds that only what can be measured can be changed. Although it is too strong to be taken literally, it applies well to search engine optimization. Successful SEOs track their results rigorously and regard measurement as pivotal both to success and to building better strategies.

Here are a few metrics you will want to measure and keep regular track of:

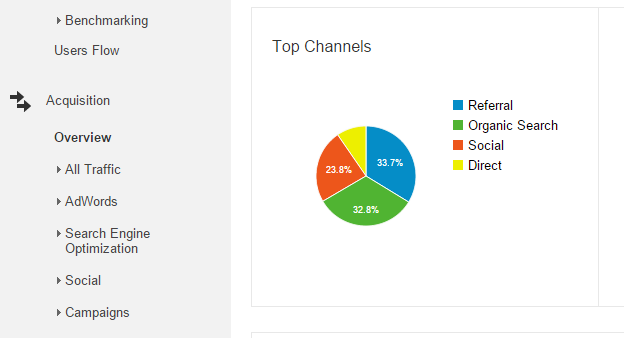

1. Search engine share of traffic

It is crucial to know how much each source contributes to your website’s traffic on a monthly basis. These sources are usually classified into four groups based on where the traffic originated:

- Direct navigation

These are people who typed your URL directly and landed on your homepage, visited from a bookmark, or followed a link a friend sent them by chat or email. Referrals through email are generally not trackable, so they end up counted as direct navigation.

- Referral traffic

These are visitors who arrived through promotional content, a campaign, or links found elsewhere on the web. This traffic is easy to track.

- Search engine traffic (also called organic traffic)

This is the classic query traffic sent by search engines: a search brings up your page on the results page, and the user clicks through to your URL.

- Social traffic

This part of traffic includes the visits originating on social networks, and it allows you to measure the benefits of your efforts on social media in terms of visits and conversions.

Knowing these figures and percentages allows you to identify your weaknesses and strengths, understand increases and decreases in traffic and their sources, and pick up patterns in your performance that would stay invisible if you looked only at the overall total rather than segmenting it by source.
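The arithmetic behind this segmentation is simple. Here is a minimal sketch, assuming you already have monthly visit counts per source (for example, exported from your analytics tool); the visit numbers and function names are hypothetical.

```python
def share_by_source(visits):
    """Percentage of total visits contributed by each traffic source."""
    total = sum(visits.values())
    return {source: round(100 * count / total, 1) for source, count in visits.items()}

def change_by_source(previous, current):
    """Month-over-month percentage change in visits for each source."""
    return {
        source: round(100 * (current[source] - previous[source]) / previous[source], 1)
        for source in current
        if previous.get(source)  # skip sources with no visits last month
    }

# Hypothetical monthly visit counts, for illustration only.
last_month = {"direct": 1000, "referral": 800, "search": 2000, "social": 500}
this_month = {"direct": 1200, "referral": 800, "search": 2400, "social": 600}

print(share_by_source(this_month))
# {'direct': 24.0, 'referral': 16.0, 'search': 48.0, 'social': 12.0}
print(change_by_source(last_month, this_month))
# {'direct': 20.0, 'referral': 0.0, 'search': 20.0, 'social': 20.0}
```

Here the flat referral figure only stands out once traffic is split by source; the overall total simply grew.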

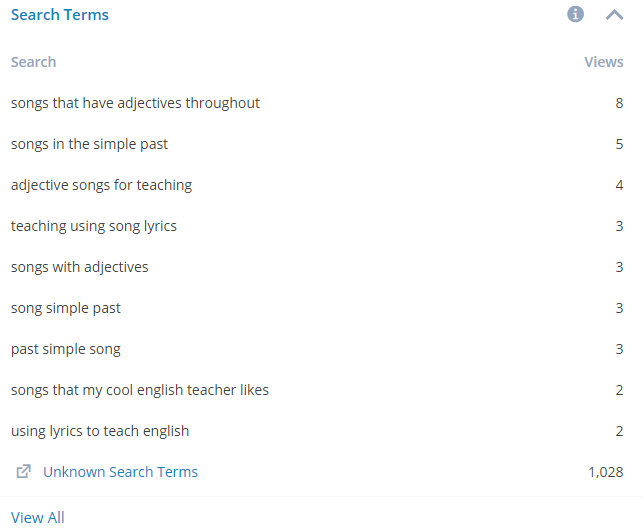

2. Referrals due to certain keywords or phrases in particular

It is essential to know which keywords are the magic words bringing in the most traffic, and which ones are underperforming. You may be under-optimized for keywords that have a lot of traffic potential and already contribute a good share of traffic. If your website were a song, keywords would be the catchy bits that everyone remembers, the memorable parts of the lyrics or melody. So you need a clear picture of what each keyword is doing for your website. A website’s analytics are incomplete without regular tracking of keyword performance, and fortunately there are plenty of tools to help with keyword metrics.
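As a minimal sketch, assuming rows shaped like a query report with clicks and impressions (such as the one in Google Search Console’s performance report), the snippet below lists the top keywords and flags queries that are seen often but rarely clicked. The figures, thresholds and names are hypothetical; pick thresholds that suit your own site.

```python
from dataclasses import dataclass

@dataclass
class QueryStats:
    """One row of keyword data: a search query and how it performed."""
    query: str
    clicks: int
    impressions: int

    @property
    def ctr(self) -> float:
        """Click-through rate: clicks divided by impressions."""
        return self.clicks / self.impressions if self.impressions else 0.0

def top_keywords(rows, n=3):
    """The n queries that brought in the most clicks."""
    return sorted(rows, key=lambda r: r.clicks, reverse=True)[:n]

def underperformers(rows, min_impressions=1000, max_ctr=0.02):
    """Queries with many impressions but a low click-through rate:
    candidates for better optimization."""
    return [r.query for r in rows if r.impressions >= min_impressions and r.ctr < max_ctr]

# Hypothetical figures for illustration only.
rows = [
    QueryStats("chocolate chip cookies", clicks=900, impressions=12000),
    QueryStats("oatmeal cookies", clicks=40, impressions=5000),
    QueryStats("gluten free cookies", clicks=150, impressions=3000),
    QueryStats("cookie gift box", clicks=5, impressions=400),
]

print([r.query for r in top_keywords(rows, n=2)])  # ['chocolate chip cookies', 'gluten free cookies']
print(underperformers(rows))                       # ['oatmeal cookies']
```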

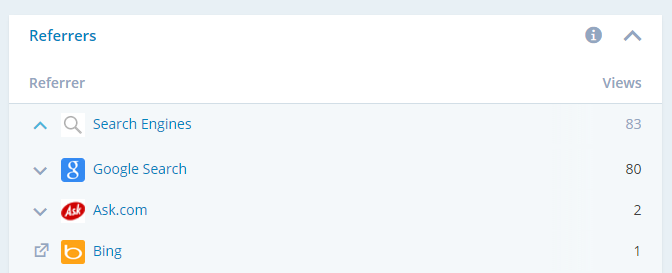

3. Referrals by specific search engines

It is crucial to measure how your website is doing in relation to specific search engines, not just how your site is doing with search engines generally. Let us look at a few reasons why you need to have a picture of your relationship with specific search engines:

- Comparing market share against performance

This lets you compare each search engine’s traffic contribution with its market share. Different search engines perform better in different search categories or fields. For example, Google tends to do better in areas that attract a younger, more technology- and internet-literate audience than in fields like history or sports.

- Understanding the data in visibility graphs

If search traffic takes a dive and you keep separate measurements of each search engine’s relative and absolute contribution, you will be in a better position to troubleshoot the problem. For example, if the fall in traffic is consistent across all search engines, the issue is probably one of access (such as crawlers being unable to reach the site) rather than a penalty; a penalty would typically show up as a steeper fall in one engine’s contribution, such as Google’s, than in the others.

- Exploring strategic worth

Since different search engines respond differently to optimization efforts, you can run a more effective and focused strategy if you know which of your techniques or tactics perform well on which engine. For example, on-page optimization and keyword-centered methods deliver more significant results on Bing and Yahoo! than on Google. That way you will know what you can do to raise rankings and attract traffic from specific search engines, and what you might be doing wrong.
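The first two comparisons above can be sketched in a few lines. This is a minimal sketch under stated assumptions: the market share values are placeholders, not real market data; the visit counts are hypothetical; and the 10-point tolerance used to call a drop "uniform" is an arbitrary choice.

```python
def share_vs_market(visits, market_share):
    """Each engine's share of your search visits divided by its market
    share (both in percent). Above 1.0: the engine sends more than its share."""
    total = sum(visits.values())
    return {
        engine: round((100 * count / total) / market_share[engine], 2)
        for engine, count in visits.items()
    }

def diagnose_drop(before, after, tolerance=10.0):
    """Compare per-engine percentage changes in visits. A similar drop
    everywhere suggests an access problem; a drop concentrated on one
    engine suggests an engine-specific issue such as a penalty."""
    changes = {e: 100 * (after[e] - before[e]) / before[e] for e in before}
    if max(changes.values()) - min(changes.values()) <= tolerance:
        return ("uniform", None)
    return ("engine-specific", min(changes, key=changes.get))

# Placeholder market shares and hypothetical visit counts, not real data.
market = {"google": 90, "bing": 5, "yahoo": 5}
print(share_vs_market({"google": 1800, "bing": 150, "yahoo": 50}, market))
# {'google': 1.0, 'bing': 1.5, 'yahoo': 0.5}

before = {"google": 1000, "bing": 200, "yahoo": 100}
print(diagnose_drop(before, {"google": 500, "bing": 100, "yahoo": 50}))  # ('uniform', None)
print(diagnose_drop(before, {"google": 400, "bing": 190, "yahoo": 95}))  # ('engine-specific', 'google')
```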

SEO tools to use

Apart from the tools we have mentioned earlier, there are several other tools that are worth mentioning, as they can be of great assistance with different SEO tasks, as well as with measuring the success of your strategy.

The most important tools are Google Search Console (Bing offers an equivalent, Bing Webmaster Tools, for webmasters optimizing their websites for Bing and Yahoo!) and Google Analytics.

The main benefits of these tools include:

- Both offer a free version, so anyone can use them. All you need is a Google account.

- They are easy to navigate, so even beginners will have no problems using them.

- They provide data that helps you improve your website’s usability.

- They can be linked to other Google services (such as Google AdWords, now Google Ads) to create comprehensive reports for analyzing and improving the performance of your strategy.

Google Search Console

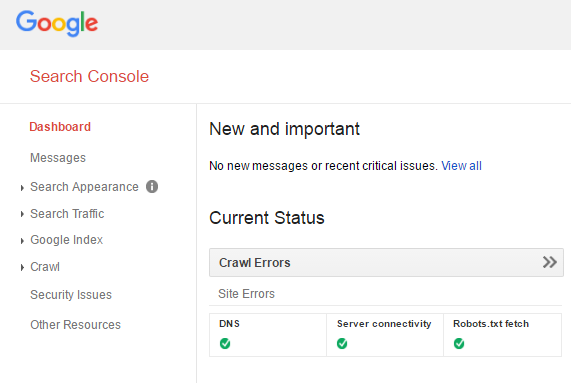

This is a must-have tool for all webmasters, as it helps you track and analyze the website’s overall performance, crawl errors, structured data, internal links and more. You can access Google Search Console (formerly Google Webmaster Tools) for free with a Google account; you will need to add and verify your website in your Search Console account before you can see its data.

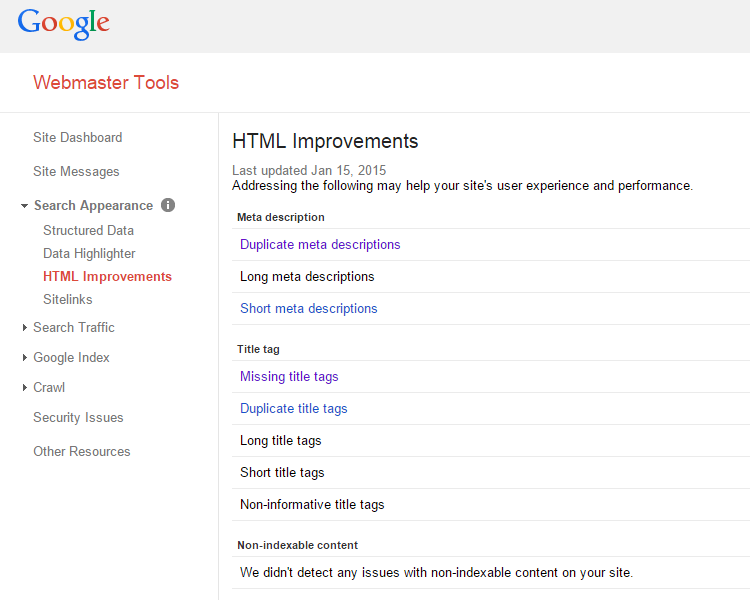

In addition, the tool suggests HTML improvements for your website, which is extremely helpful when it comes to optimization.

Some of the common HTML improvements include:

- Missing meta description

- Duplicate meta description

- Too long or too short meta description

- Missing title tag

- Duplicate title tag

- Too long or too short title tag

- Non-indexable content
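Most of these checks are easy to reproduce on your own pages. The sketch below covers only the title and meta description checks (not non-indexable content) and assumes you have already extracted each page’s title and description. The length limits are hypothetical, since search engines do not publish fixed values, and the page data is illustrative.

```python
from collections import Counter

# Hypothetical length limits in characters; search engines publish no fixed values.
LIMITS = {"title": (30, 60), "description": (70, 160)}

def audit_pages(pages):
    """pages maps URL -> {"title": str or None, "description": str or None}.
    Returns URL -> list of issues, for pages with at least one issue."""
    issues = {url: [] for url in pages}
    for field, (low, high) in LIMITS.items():
        # Count how many pages share each non-empty value, to spot duplicates.
        seen = Counter(page.get(field) for page in pages.values() if page.get(field))
        for url, page in pages.items():
            value = page.get(field)
            if not value:
                issues[url].append(f"missing {field}")
                continue
            if seen[value] > 1:
                issues[url].append(f"duplicate {field}")
            if len(value) < low:
                issues[url].append(f"{field} too short")
            elif len(value) > high:
                issues[url].append(f"{field} too long")
    return {url: found for url, found in issues.items() if found}

pages = {
    "/": {"title": "Millie's Cookies | Fresh Baked Cookies Delivered Daily",
          "description": "Order freshly baked cookies online from Millie's Cookies, "
                         "with same-day delivery across the city."},
    "/about": {"title": "Millie's Cookies | Fresh Baked Cookies Delivered Daily",
               "description": None},
    "/faq": {"title": "FAQ",
             "description": "Answers to common questions about ordering, delivery, "
                            "allergens and gift boxes at Millie's Cookies."},
}
print(audit_pages(pages))
```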

Google Analytics

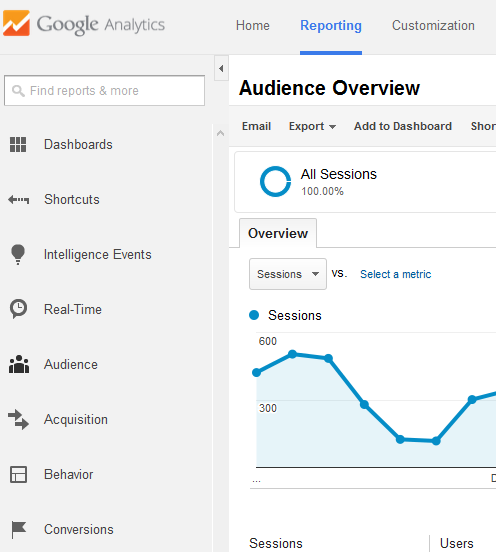

This tool tracks your website’s performance, the behavior of its visitors, traffic sources and more. Google Analytics offers a wealth of data that helps you learn who visits your website (audience), how they arrive at it (acquisition), and how they spend their time on it and interact with it (behavior). You can also monitor activity in real time and analyze conversions by setting up goals or linking to Google AdWords.

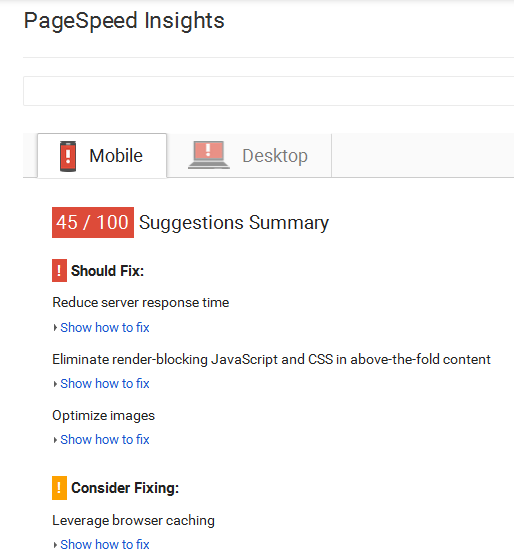

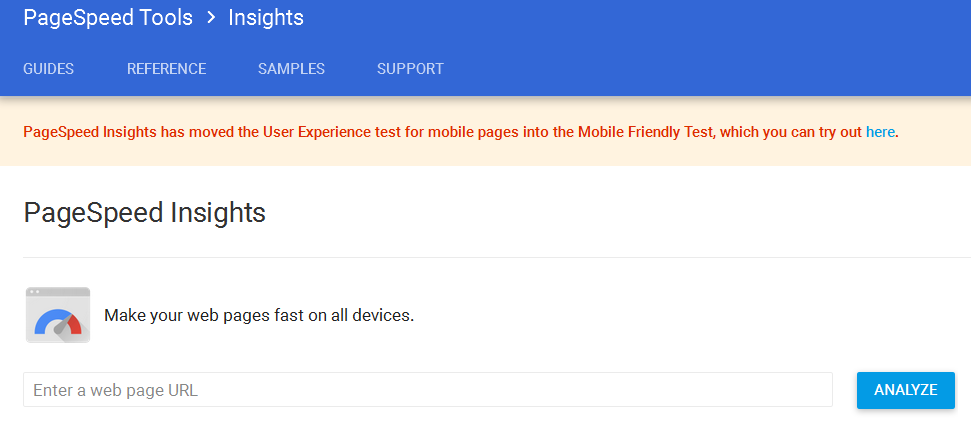

PageSpeed Insights

Since a website’s loading time is an important ranking factor, you should measure your website’s speed and try to improve it. Use PageSpeed Insights for this purpose.

When you analyze a URL, you will see suggestions for optimizing the parts of your website that affect its speed. These include:

- Optimizing images

- Reducing server response time

- Eliminating render-blocking JavaScript and CSS

- Leveraging browser caching

- Avoiding redirects

- Enabling compression

- Prioritizing visible content

This tool also provides suggestions on how to fix these elements.
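To get a feel for why "enabling compression" matters, here is a minimal sketch that estimates how much smaller a page becomes with gzip, one common form of HTTP compression. It uses Python’s standard gzip module on a hypothetical, highly repetitive HTML page; it only estimates savings and does not configure a web server, and real pages will usually compress less than this one.

```python
import gzip

def compression_savings(body: bytes, level: int = 6) -> float:
    """Percentage of bytes saved by gzip-compressing a response body."""
    compressed = gzip.compress(body, compresslevel=level)
    return 100 * (1 - len(compressed) / len(body))

# Hypothetical, highly repetitive HTML similar to a product listing page.
page = ("<div class='product'><h2>Chocolate chip cookie</h2>"
        "<p>Freshly baked every morning.</p></div>\n" * 200).encode("utf-8")

print(f"{len(page)} bytes uncompressed, "
      f"{compression_savings(page):.1f}% smaller with gzip")
```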