4. Search Engine Friendly Site Design and Development

Before we begin to talk about search engine friendly site design and development, it is crucial to remember one thing: websites do not look the same to search engines as they do to us. Content that seems amazing and important in our eyes might be meaningless, or not even interpretable, to a search engine. This has to do with something we spoke about earlier: spiders and indexing. Some content lends itself to being indexed and understood by spiders; some content simply does not.

Indexable content

Although web crawling has become quite a sophisticated task, it has not gotten far enough to view the web through human eyes, and it needs to be helped along the way. So all design and development needs to be done keeping in mind what the World Wide Web looks like to search engines. We need to look at the world through the eyes of a robot or piece of software in order to create websites that are search engine friendly and have long-term optimization built into them, rather than relying on a few tweaks here and a few keywords there, which will only help your SEO for a few weeks or months at most.

It is crucial to remember that despite how advanced spiders may have become, they are still only good at picking up on content that is mostly HTML and XHTML, so the elements of your website that you think are most important have to be in the HTML code. Java applets, Flash files or images are not read very well by spiders or understood for that matter. So, you could have a great website designed, with its most amazing content outside the HTML code and a search engine will not pick it up because what the spiders are looking at is the HTML text.

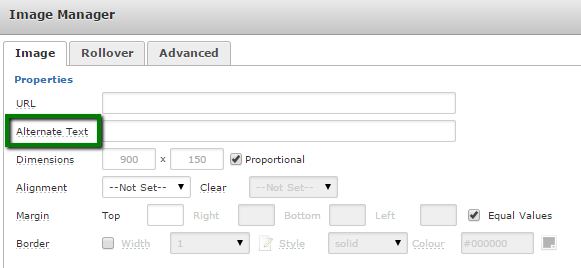

However, there are a few things you can do to make images, videos or fancy Java applets on your site become somewhat involved in the indexing business, or become indexable content. First of all, if you have images in JPG, GIF or PNG format that you want spiders to notice and index, you will need to include them in the HTML with alt attributes that give the search engine a description of each image.

Secondly, you can still make the content found in Flash or Java plug-ins count by providing text on the page that explains what the content is about.

Thirdly, if you have video or audio content on your website that you think matters and should be indexed, content that you think users should be able to find, you need to provide the search engine a transcript of the phrases to go along with that audio/video content.
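The first of these steps can be checked mechanically. The following is a minimal sketch, assuming you have a page's HTML as a string; the class name, function name and sample markup are hypothetical and only illustrate the idea of listing images that give a spider no description to index.

```python
from html.parser import HTMLParser


class AltAuditor(HTMLParser):
    """Collects the src of every <img> that has no usable alt text."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt") or ""
        # This sketch treats an empty alt the same as a missing one.
        if not alt.strip():
            self.missing_alt.append(attributes.get("src", "(no src)"))


def images_missing_alt(html):
    auditor = AltAuditor()
    auditor.feed(html)
    return auditor.missing_alt


# Hypothetical markup, for illustration only.
sample_page = """
<img src="puppy.jpg" alt="Golden retriever puppy playing in the grass">
<img src="banner.png">
<img src="spacer.gif" alt="">
"""
print(images_missing_alt(sample_page))
```

Images reported by a check like this are candidates for a descriptive alt attribute; purely decorative images may reasonably be left as they are.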

Remember, if a spider cannot read it, the search engine will not be feeding it to users. Content needs to be indexable if it is to be visible to a search engine and then appear in its results. There are tools that you can use, like SEO-browser.com or Google’s cache, to look at a website through the eyes of a search engine and see what it looks like to a spider. This helps you understand what you might be doing wrong and what others might be doing right when it comes to being noticed by search engines.

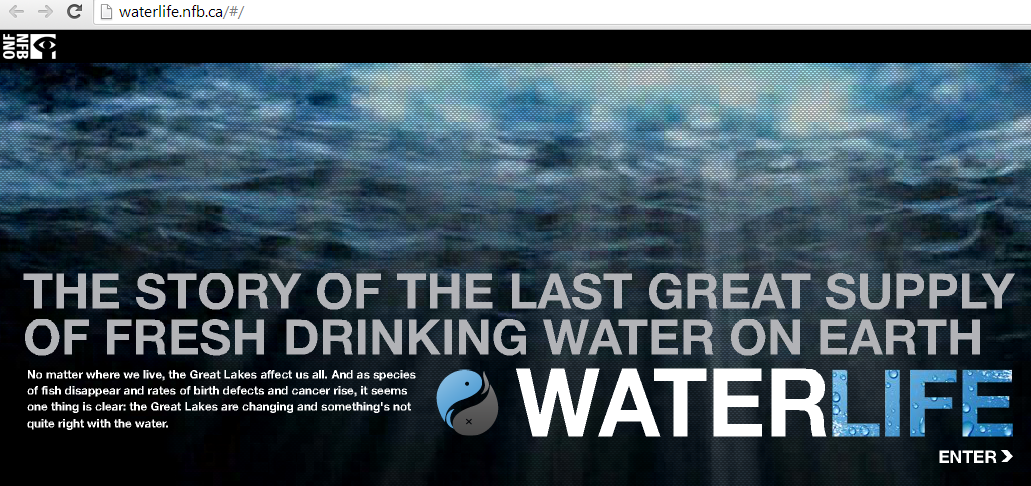

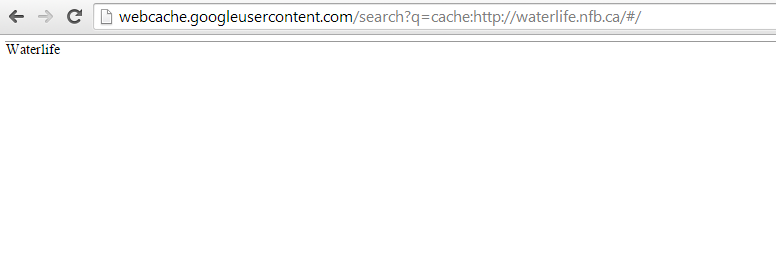

One of the ways to see content through the eyes of a search engine is to access the cached version of a website. Type the following in the address bar of your browser, and do not forget to replace “example.com” with the URL of the website.

http://webcache.googleusercontent.com/search?q=cache:http://example.com/

For example, if your website is built using Java, you will see something like this:

But that is not what the search engines see. Using the text-only copy of the cached version of the website, you can see that the same website looks quite different to search engines. In fact, search engines cannot see anything on this website apart from the title “Waterlife”.
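To get a rough feel for this text-only view without a third-party tool, you can strip a page down to its HTML text yourself. The sketch below is a simplification and not how any real spider works: it assumes that text inside script, style, object and applet elements is ignored, and the sample markup is a hypothetical stand-in for a plug-in-heavy page.

```python
from html.parser import HTMLParser


class TextOnlyView(HTMLParser):
    """Keeps plain HTML text and skips script, style and plug-in containers."""

    SKIPPED = {"script", "style", "object", "applet"}

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self._skip_depth == 0:
            self.chunks.append(text)


def text_only(html):
    view = TextOnlyView()
    view.feed(html)
    return view.chunks


# Hypothetical page whose real content lives inside a plug-in.
plugin_heavy_page = """
<html><head><title>Waterlife</title></head>
<body>
<object data="intro.swf" type="application/x-shockwave-flash">
  <param name="quality" value="high">
</object>
<script>startAnimation();</script>
</body></html>
"""
print(text_only(plugin_heavy_page))
```

For a page like this, the only text that survives is the title, which matches what the text-only cached copy shows.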

Title tags

A title tag (also called title element, technically) is one of the most important things you give to a search engine spider and its algorithm. It should ideally be a brief and precise description of what your website is all about and the content that a user can hope to find.

Title tags are not just important for search engines but also for the benefit of users. There are three dimensions in which a title tag generates value and these are relevancy, browsing and the results that search engines produce.

Typically, search engines are only interested in the first 50-65 characters of your title tag, so the first thing you will need to be careful about is its length. Remember, title tags are not an introduction to your website; they are a summary responsible for attracting users and helping search engines understand how relevant your website is to the keywords found in users' queries. If you are targeting multiple keywords, you might need to stretch the limit and go longer in pursuit of better rankings.

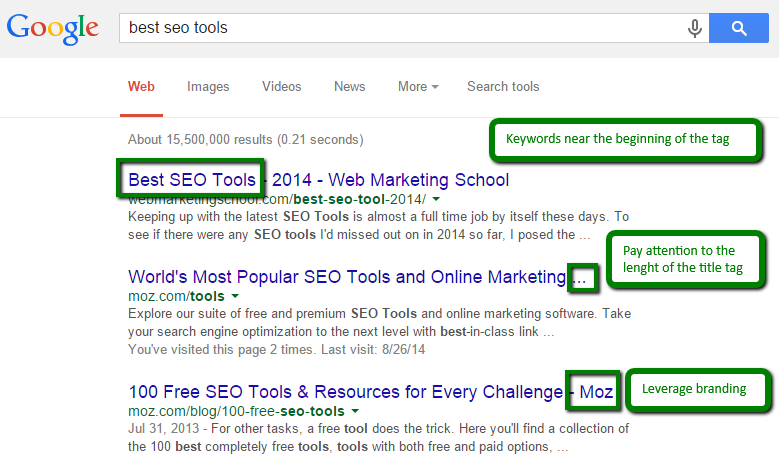

Another little tweak to help you with rankings is to try and get your important keywords as near to the start or opening of the title tag as possible. It will increase the chances of users actually clicking through to your website when they see your page listed in search results.

In a survey conducted with SEO industry leaders, 94% of the participants shared that the title tag was the best place to insert keywords in order to pursue higher rankings on search engines. That is why putting emphasis on coming up with creative, descriptive, relevant and keyword-rich title tags is so important. They are the soul of your site expressed in a sentence or two, and quite possibly the only representation that most users will have of your website unless they actually visit it.

One thing you can do to increase the possibility of better rankings through your title tag is to leverage branding: try to use a brand name at the end of your title tag. This will translate into better click-through rates with individuals who already know that brand or relate to it. You can experiment with title tags using the following tentative structure:

Primary Keyword -> Secondary Keyword -> Brand name / end
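The structure above can be sketched as a small helper. The separators (" - " and " | "), the 65-character ceiling and the sample keywords and brand are all assumptions chosen for illustration, not a rule that any search engine publishes.

```python
def build_title(primary, secondary, brand, max_length=65):
    """Join the parts as 'Primary - Secondary | Brand' and report whether it fits."""
    title = f"{primary} - {secondary} | {brand}"
    return title, len(title) <= max_length


# Hypothetical keywords and brand name.
title, fits = build_title("Pedigree Dogs Budapest", "Puppies for Sale", "Example Kennel")
print(title)
print(len(title), fits)
```

If the second value comes back as False, the usual remedy is to shorten the secondary keyword rather than drop the brand or the primary keyword.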

It is good to view how your title tag will appear in different online situations, such as when someone posts the URL to your website on a social media site like Facebook or when your name appears on different search engines. Search engines will automatically make the keywords which match those in the query appear in bold. So it may be a good idea to use your keywords carefully. You should not depend only on one, but you should have two or three keywords that you can rely on for higher rankings.

Meta tags

Meta tags give descriptions and instructions and provide information about a webpage to search engines and a whole host of other clients. They are part of the HTML or XHTML code of a page and sit in its head section. There are many types of meta tags, each with its own use, from controlling the activity of search engine spiders to telling a search engine that the design of your website is mobile-friendly.

Not all search engines will understand all meta tags. Google, for example, will ignore the meta tags it does not understand and only recognize the ones it does. It serves little or no purpose to put keywords in the metadata and expect that to yield better rankings. Below is a list of some useful meta tags and what you can do with them.

‘Spider tags’

The robots meta tag can be employed to influence the activity of internet spiders on a page-to-page basis.

Index/noindex will inform the search engine whether or not to index a certain page. You usually do not need to tell a search engine to index every page, so the noindex option is the only one you will probably be using.

Follow/nofollow will tell an engine whether or not to follow the links on a certain page when it crawls, and thus whether to disregard those links for ranking.

Noarchive lets an engine know that you do not wish for it to save a cached copy of a certain page.

Nosnippet will tell the engine not to display a description with a page’s URL and title when it shows up on the search results page.

Noodp/noydir will instruct an engine to refrain from picking up a description snippet for the page from either the Open Directory Project or Yahoo! Directory when the page comes up in search results.
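The directives listed above are combined in the content attribute of a single robots meta tag placed in the page's head section. The helper below is a minimal sketch: the function name is hypothetical, and the set of accepted directives is limited to the ones named in this section.

```python
from html import escape

# Only the directives discussed in this section; engines may support others.
KNOWN_DIRECTIVES = {
    "index", "noindex", "follow", "nofollow",
    "noarchive", "nosnippet", "noodp", "noydir",
}


def robots_meta(*directives):
    """Build a robots meta tag, rejecting directives this sketch does not know."""
    cleaned = [d.strip().lower() for d in directives]
    unknown = [d for d in cleaned if d not in KNOWN_DIRECTIVES]
    if unknown:
        raise ValueError(f"Unknown robots directive(s): {', '.join(unknown)}")
    content = ", ".join(cleaned)
    return f'<meta name="robots" content="{escape(content)}">'


print(robots_meta("noindex", "nofollow"))
```

Rejecting unknown directives is a deliberate choice here: a typo such as "no-index" would otherwise be silently ignored by the engine and the page would stay indexed.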

The description meta tag (or meta description)

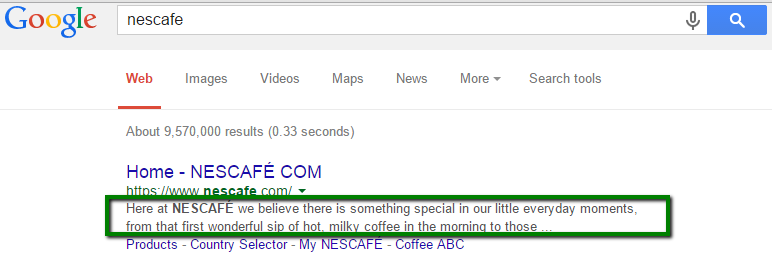

This is a short description of your web page that lets a search engine know what the page is about, and it will, at times, appear as a snippet beneath your site title on a search engine’s results page. Keywords found in the metadata will usually not influence rankings. It is best not to exceed the 160-character limit in your meta description, as anything longer will be cut off in display, and the W3C guidelines recommend the same length.
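One way to stay within that length is to trim the description at a word boundary before writing the tag. This is a sketch under assumptions: the function names are hypothetical, and the trailing "..." is simply one way of marking a shortened description.

```python
from html import escape


def trim_description(text, limit=160):
    """Collapse whitespace and cut at a word boundary so the result fits the limit."""
    text = " ".join(text.split())
    if len(text) <= limit:
        return text
    # Leave room for the three-character ellipsis, then drop the partial last word.
    return text[: limit - 3].rsplit(" ", 1)[0] + "..."


def description_meta(text, limit=160):
    return f'<meta name="description" content="{escape(trim_description(text, limit))}">'


print(description_meta("Pedigree dogs in Budapest: breeders, puppies and advice."))
```

Trimming yourself, rather than letting the results page cut the text off, lets you decide where the description ends.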

It is a good idea to find out which meta tags different search engines understand and what meta elements they ignore, so you do not waste your time thinking you have optimized in the meta area while actually being ignored by the search engine. You can find a list of meta tags that Google recognizes online. Yahoo! and Bing have a similar document for webmasters.

Meta tags that Google understands:

https://support.google.com/webmasters/answer/79812?hl=en

Yahoo! understands the following tags:

https://help.yahoo.com/kb/yahoo-web-hosting/create-meta-tags-sln21496.html

Bing explains the most important tags in the SEO segment of the Webmaster Guidelines:

http://www.bing.com/webmaster/help/webmaster-guidelines-30fba23a

Link structure

It is important for search engine spiders to be able to crawl your website smoothly and find their way to all the links that your site contains. This requires your website to have a link structure that is search engine friendly and carved out with spiders in mind. If there are no direct and crawlable links pointing to certain pages on your website, they might as well not exist, no matter what great content they may contain, since they are unreachable by spiders. It is surprising how many websites with great content make the mistake of having a link structure with navigation that makes it hard, if not impossible, to get parts of their website to appear on search engines.

There are many reasons why certain parts of a website or pages might not be reachable by search engines. Let us discuss some common reasons.

Firstly, if you have content on your website that is accessible only after submitting certain forms, that content will most likely not be accessible to spiders, and thus not reachable for search engines. Whether your form requires a user to log in with a password, fill in a few details, or answer some questions, spiders will be cut off from any content found behind it, since they usually do not make an effort to submit forms. That content becomes invisible.

Secondly, spiders are not very good at crawling pages with links in Java and pay even less attention to the links embedded within such a page. You should try to use HTML links instead, or accompany Java links with HTML ones wherever possible. The same goes for links that are in Flash or other plug-ins. Even if they are embedded on the main page, a spider might not pick them up through the site’s link structure and they may remain invisible because they are not HTML links.

Thirdly, the robots meta tag and the robots.txt file are both used to influence the activity of spiders and restrict it. Make sure that only the pages you wish for spiders to disregard have directives for spiders to do so. Unintentional tagging has caused the demise of many fine pages in the experience of webmasters as a community.

Fourthly, another common reason for a link structure that is broken for spiders is search forms. You may have millions of pages of content hidden behind a search form on your website, and it would all be invisible to a search engine, since spiders do not conduct searches when they are crawling. You will need to link such content from an indexed page, so that the content can be found during web crawling.

Fifthly, try to avoid placing links in frames or iFrames unless you have a sound technical understanding of how search engines index and follow links in frames. Although such links are technically crawlable, they pose structural and organizational problems.

Lastly, links can be ignored or have poor accessibility if they are found on pages with hundreds of them, as spiders will only crawl and index a given amount to safeguard against spam and protect rankings. It is best to make sure that your important links are found on clean and organized pages and knit into a clear structure that is easy for spiders to follow and index.
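The reasons above share one theme: a spider reliably follows plain HTML links and little else. The sketch below models that in a deliberately simplified way by collecting only anchors with an href attribute; the names and the sample markup are hypothetical, and real spiders are more capable than this.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects the href of every plain HTML anchor on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawlable_links(html):
    collector = LinkCollector()
    collector.feed(html)
    return collector.links


# Hypothetical navigation: two HTML links, one script-driven element, one search form.
page = """
<a href="/products/">Products</a>
<span onclick="location.href='/hidden/'">Hidden page</span>
<form action="/search"><input name="q"></form>
<a href="/contact/">Contact</a>
"""
print(crawlable_links(page))
```

In this model the page behind the script-driven element and everything behind the search form are never discovered, which is why such content needs an ordinary HTML link from an indexed page.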

Nofollow links

There is a lot to be said about the debate over nofollow links and it can be confusing just understanding what they actually are.

Let us start by looking at what normal ‘follow’ links are. When another site links to your website or one of its pages, that page gets a bit of something SEO professionals call link juice. You can also think of it as points given for the link. The more inbound links you get, the more SEO points you get. Search engines interpret this as a good thing, because they figure that if lots of people are linking to your site then it must be of value, and thus that page will be given preference by the search engine in its results.

The thing about link juice is that, like real juice, it flows. It is transferred from site to site. So, for example, if National Geographic has a link to your page on their website, that means a lot more link juice or points than if some small blog mentioned your URL with a hyperlink. National Geographic, being a wildly popular internet destination, obviously has more link juice and PageRank.

Now this is where nofollow links come in and why they have become so important in the world of SEO and getting better rankings. A nofollow link does not add points to your page or count for that matter; they are treated like the losers of the link world in terms of SEO points.

A typical nofollow link is a tag that looks like this:

<a href="http://example.com/" rel="nofollow">Example Website!</a>

While search engines mostly ignore any attributes you apply to links, they generally do respond to one exception, the rel="nofollow" attribute. Nofollow links direct search engines not to follow certain links, although some still do. The attribute also signals that the link should not be read as a normal one.

Nofollow links started out as a method for avoiding automatically generated spam, blog entries and other forms of link injection, but over time nofollow has become a tool for letting search engines know not to transfer any link value and to disregard certain pages. When you put the nofollow attribute on a link, it will be read and treated differently by search engines than the other links, which are followed.
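To see how many of a page's links carry the attribute, you can split its anchors by their rel value. This is a minimal sketch with hypothetical names and sample markup; it only inspects the rel attribute and makes no claim about how a particular engine then treats the link.

```python
from html.parser import HTMLParser


class RelAuditor(HTMLParser):
    """Sorts a page's links into followed and nofollowed lists."""

    def __init__(self):
        super().__init__()
        self.followed = []
        self.nofollowed = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        # rel may hold several space-separated values, e.g. "nofollow noopener".
        rel_tokens = (attributes.get("rel") or "").lower().split()
        if "nofollow" in rel_tokens:
            self.nofollowed.append(href)
        else:
            self.followed.append(href)


def split_links(html):
    auditor = RelAuditor()
    auditor.feed(html)
    return auditor.followed, auditor.nofollowed


# Hypothetical comment section with one editorial link and one user-submitted link.
comments = """
<a href="http://example.com/guide/">Our guide</a>
<a href="http://example.org/spam/" rel="nofollow noopener">Cheap watches</a>
"""
followed, nofollowed = split_links(comments)
print(followed)
print(nofollowed)
```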

Having a few or even a good number of nofollow links to your website is not necessarily a bad thing, as many popular or highly ranked websites will generally have a larger number of inbound nofollow links than their less popular and lower ranked counterparts. It is all part of having a large portfolio of links pointing to your website.

Google confirms that it does not follow nofollow links in the majority of cases and that it does not allow them to transfer PageRank or anchor text. Nofollow links are disregarded in the graph of the web that the search engine maintains for itself. They are also said to carry no weight and are thought of as just HTML text and nothing more. However, a lot of webmasters believe that search engines regard nofollow links from high authority websites as signs of trust.

While nofollow links might seem like bad news for link building if they are linked to your site, this does not have to be the case. Nofollow links still build awareness for your website, as users will still get to see the name of your site and even click on the link. The only difference is that you will not be getting better rankings, as a result of the nofollow link.

As a webmaster, nofollow links are of immense use to you too because there will be pages that you want a search engine to disregard and not follow. They are also of immense use on paid links, comments, forums and anywhere you can expect spamming or pages that are little visited because they might bring down the performance of your site overall.

It is good to have a diverse backlink profile that is made up of both follow and nofollow links. Although follow links will definitely do more for your SEO, nofollow links have their uses and can be instrumental in avoiding spam and in keeping unnecessary pages from being indexed by spiders.

Keyword usage

Keywords are perhaps the most important element in a search activity. They are the basic unit of a query, and indexing and retrieval of information would be impossible without them. Just imagine if there were 25 different words used for car tires. It would be a nightmare trying to get any traffic to your website from search engines, because you would be missing out on a majority of users interested in car tires, just because you did not have the right word to match the keywords they used for car tires.

Search engines index the web as they crawl, using keywords to form indices. Depending on the word, every keyword will have anywhere from thousands to millions of pages that are relevant to it. If you think of Google or Bing as one huge palace and websites as people, then the keywords are the separate rooms with tags on their doors, and it is the job of spiders to put relevant people in the most relevant rooms. Websites with more relevant and identifiable keywords get to stay in more than one room of the palace, and the more popular the keyword, the bigger its room and thus the more people in it.

Search engines make use of many small databases based on keywords rather than one huge database that contains everything. This makes sense, just as products are listed in aisles in a supermarket, which makes it easier to find what you need and have a look around. Imagine if there were not any aisles and items were placed without classification and order, it would be a nightmare just buying a toothbrush and a watermelon, because of the size of the supermarket and endless categories of products available.

It goes without saying that whatever content you wish to serve as keywords or to be identifiers for your website, what you want to be recognized for on the internet, you will need to confirm that such content is indexable and that it has been indexed by a search engine.

When considering keywords, it is best to remember that the more specific your keyword, the better the chances you have of coming up higher in a results page when someone types it into their query. For example, ‘dog’ is a very general and popular keyword and one for which you will have considerable competition. Even established and popular websites with a wide user base have problems staying on top with general keywords.

So if you are a small fish in a big online sea, your chances of attracting attention are very low if you are stuck with general keywords with a lot of competition. However, carrying the above-mentioned example forward, if you made your keyword more specific, say, something like ‘pedigree dogs Budapest’, you would enter a keyword field that is much more relevant to you and has less competition. Here, you actually have a chance of getting to the top of the page, or at least high up in the results.

Now you will be more accessible to the users who are interested in exactly the content that you have to offer. Remember, it is always better to aim for being higher up in a more specific or narrow keyword than to settle for lower rankings for a more popular keyword.

Search engines pay much more attention to your title tag, anchor text, meta tag description and the first 200 words of your webpage. So it is crucial to SEO that you use your keywords as much as possible but with maturity in these four areas. Be careful about overuse. There is nothing more obnoxious than a website that is stuffed with keywords unnecessarily and without elegance. Overkill when it comes to keywords will actually work against you and defeat the purpose, which was to win the trust of users and search engines.

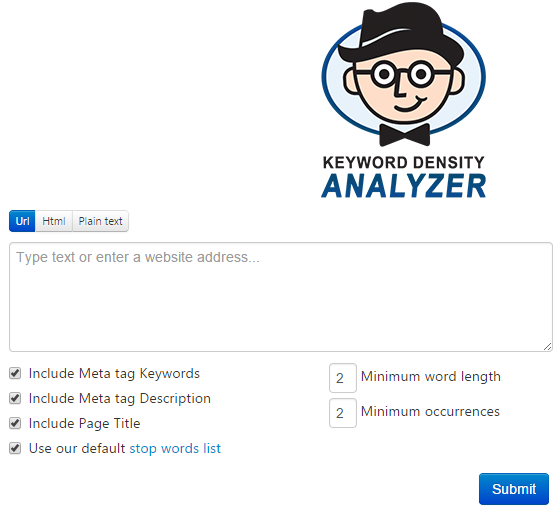

It appears unprofessional, makes your website seem without real value, and resembles spam because it gives the impression to search engines and users alike, that you are somehow trying to trick the system into paying attention to your page. Think of yourself as a user – do you trust websites or even like websites that seem to jump at the excuse of using a keyword repeatedly? Or are you more likely to disregard such a website as lacking in content value? You can keep track of keyword density through tools available online that measure the way keywords are spread out across your page.

Remember, if you choose your keywords properly and honestly, you will not need to stuff your content with them, as they will appear naturally in the text to a necessary degree because they are actually relevant to your website and not just something you picked up for rankings or because it was trending on the internet. Find the closest and most popular keyword that is relevant to your website. You can get away with a little cleverness when it comes to the use of popular or strategic keywords, but that is only if you already have a keyword that is relevant to your website and has been chosen honestly, keeping the benefit of the user in mind.

URL structures

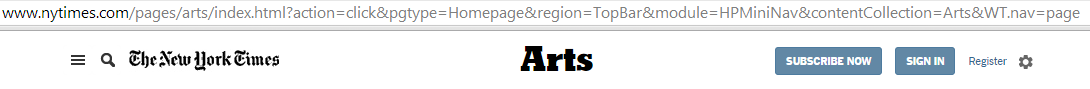

Although no longer seen as being as important as it once was, a URL can still influence a website's rankings in major ways, as well as the way users experience and use the site. The first rule is that if a user can look at your URL and correctly predict what content they will find on your website, you have gotten it right. A URL with too much cryptic text, parameters or numbers seems intimidating and unfriendly to users. That is why it is best to avoid oddly worded URLs with few recognizable words or with words that are irrelevant.

Try to make your URL seem friendly.

The second thing is to aim for keeping your URL as short as possible, not just for your main page but for the pages that spread out from your main page. This makes it easier for users to type, remember and copy/paste your URL into social media sites, forums, emails, blogs, etc. It also means that your URL will be showing up whole on the search results page.

Thirdly, try to get any relevant keywords from your website into your URL as well, as this can help your rankings substantially and also help you gain more trust and popularity with users. But trying to lace it with as many keywords as possible is also dangerous and will actually defeat the purpose and work against you. So beware of overuse.

Fourthly, and this is very important, always prefer static URLs over dynamic ones so you can make them more readable for people and somewhat self-explanatory. Links, which contain a lot of numbers, hyphens and technical terms do not do well with users, affect your ranking and interfere with indexing by spiders.

Lastly, it is best to use hyphens (-) to separate words in your URL, as opposed to underscores ( _ ), the plus sign (+) or the space (%20), since not all web apps interpret these symbols properly in comparison to the classic hyphen or dash.
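Several of the rules above (short, readable, lowercase words separated by hyphens) can be applied automatically when a URL is generated from a page title. The following is a minimal sketch; the function name and sample title are hypothetical, and dropping non-ASCII characters is a simplification that will not suit every language.

```python
import re
import unicodedata


def slugify(title):
    """Turn a page title into a lowercase, hyphen-separated URL segment."""
    # Strip accents and drop characters that have no ASCII equivalent.
    ascii_text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Replace every run of non-alphanumeric characters with a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower())
    return slug.strip("-")


print(slugify("Pedigree_Dogs in Budapest (Buyer Guide)"))
```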

A clean and friendly URL is not just good for your relationship with search engines and for helping them crawl better; it is also good for the way users experience your website. Avoid using generic words in your URL like ‘page_3.html’, putting in unnecessary parameters or session IDs, and using capitalization where it is not needed. It is also best to avoid having multiple URLs for the same page, as this will distribute the reputation for the content found on that page amongst the different URLs. You can fix this by using a 301 redirect, or the rel="canonical" link element where you cannot redirect.
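A quick audit can flag some of these problems before a URL goes live. This sketch checks only three of the points mentioned above, and the list of session parameter names is a hypothetical assumption rather than an exhaustive or standard set.

```python
from urllib.parse import urlsplit, parse_qs

# Hypothetical list of query parameter names that usually carry a session ID.
SESSION_PARAMS = {"sessionid", "sid", "phpsessid", "jsessionid"}


def url_warnings(url):
    """Return a list of readability problems found in a URL."""
    parts = urlsplit(url)
    warnings = []
    if parts.path != parts.path.lower():
        warnings.append("uppercase characters in path")
    if "_" in parts.path:
        warnings.append("underscores instead of hyphens")
    params = {name.lower() for name in parse_qs(parts.query)}
    if params & SESSION_PARAMS:
        warnings.append("session ID in query string")
    return warnings


print(url_warnings("http://example.com/Products/page_3.html?sid=abc123"))
print(url_warnings("http://example.com/pedigree-dogs-budapest/"))
```

The first URL trips all three checks, while the second returns an empty list.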

Remember, URL structures neaten up the look and feel of your website and affect the way both users and search engines experience your website. Some URLs are more crawlable than others and some are more friendly looking and convenient for users than others.

Mobile-friendly website

In 2016, the number of mobile users surpassed the number of desktop users. This had been anticipated for a while, and experts in website optimization had been stressing the importance of mobile optimization for several years. Google’s updates also introduced mobile-friendliness as a ranking factor, and the company announced that mobile-friendly websites are given preference in the search results.

A mobile-friendly website is designed in a format that loads quickly and provides mobile-friendly navigation and touch-friendly buttons. Such a website has built-in functionality that allows it to adjust to the smaller screen, adapting both the layout and the content format.
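One common signal of such built-in adjustment is a viewport meta tag in the page's head section, which responsive pages typically declare with a content value containing width=device-width. The check below is a rough sketch with hypothetical names: it looks only for that one tag, which is an assumption about how the site is built and not a full test of mobile-friendliness.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Remembers the content of the viewport meta tag, if the page has one."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.viewport = attributes.get("content") or ""


def declares_responsive_viewport(html):
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    # Ignore spaces so "width = device-width" is also recognized.
    return "width=device-width" in finder.viewport.replace(" ", "")


# Hypothetical head sections.
responsive = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
fixed = "<head><title>Desktop only</title></head>"
print(declares_responsive_viewport(responsive), declares_responsive_viewport(fixed))
```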

How do you know if your website is mobile-friendly? Use Google’s Mobile-Friendly Test to check. You can also get suggestions on how to improve the experience for mobile visitors using Google’s PageSpeed Insights.